I19 IPFS Disassembly

After pursuing I06 IPFS Rewrite we have a working prototype using IPFS, but we are seeing complications with the IPFS library itself, in particular its networking facilities. We believe that disassembling IPFS down to its core components will allow us to achieve our goals from I06, as well as some secondary goals that were deemed too hard for now.

As anticipated in I06, our design is a seamless fit for the IPFS data layer, and this usage is Requested to be factored out in R24 IPFS ORM. Our network layer, however, deviates subtly and irreconcilably from IPFS in three ways:

- IPFS favours small sets of files that grow slowly

- IPFS intends a single open permission level for all

- IPFS presents a stable interface to its consumers

The conclusion we reach is that IPFS is designed specifically for Files, where the number of files is expected to be small, and the rate of change of those files even smaller. IPFS the library represents these architectural aspirations, and implements them by bundling together modules, then dulling down the interface of each individual module to present a stable and unified whole package interface. The IPFS library also includes several other services that run by default, which we need to intercept and turn off for our purposes, such as MFS and Preload.

IPFS is not where the team solves problems. They solve problems in the individual modules, then package those modules up into IPFS as a single exemplary use case.

Conceptually, if what IPFS provides is akin to a filesystem, then what we are providing is akin to RAM and the structures therein, such as heap, stack, and swap space. The chatter of this activity is too great to write to disk in realtime, and so we need our own layer to handle this. There is definitely a lot of overlap, such as loading of executables and swapping out stale pieces to disk, but the high frequency nature warrants a tuned design.

Here are the identified deviations in more detail:

IPFS favours small sets of files that grow slowly

The primary difference that distances us from the goals of IPFS is the rapid rate of Pulse generation that we have.

We generate Pulses that are always associated with the same chainId and, at a higher level, with the same AppRoot. We aim to use this 'grouping' nature to reduce network traffic and peer discovery times, whereas IPFS offers no such grouping. For a particular Pulse to be discoverable on IPFS, we would have to publish and then republish each of those Pulses to the IPFS DHT every 24 hours at minimum. This number of updates would result in throttling against our nodes. IPFS offers discovery of a random hash, but it requires regular network effort to keep this offer open. We offer discovery of an approot, then an address, and from there an individual Pulse, with each level of scoping vastly reducing the traffic required for lookup to be lively. Without this scoping, our offers would be too expensive to maintain.
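The scoping idea can be illustrated with a minimal in-memory sketch. It is not the prototype's implementation: `ScopedIndex`, `announce`, `lookup`, and `advertisedScopes` are hypothetical names, and the assumption is that only the approot level needs to be advertised widely while addresses and Pulses are resolved inside that scope.

```typescript
// Hypothetical sketch: ScopedIndex and its methods are illustrative names,
// not part of IPFS, libp2p, or the prototype.
type Cid = string

class ScopedIndex {
  // approot -> chain address -> CID of that chain's latest Pulse
  private readonly roots = new Map<string, Map<string, Cid>>()

  // Record a new latest Pulse. Only the first Pulse seen for an approot
  // creates a new top-level entry; later Pulses update in place.
  announce(appRoot: string, address: string, latest: Cid): void {
    let chains = this.roots.get(appRoot)
    if (chains === undefined) {
      chains = new Map<string, Cid>()
      this.roots.set(appRoot, chains)
    }
    chains.set(address, latest)
  }

  // Resolve the approot first, then the address, then the latest Pulse CID.
  lookup(appRoot: string, address: string): Cid | undefined {
    const chains = this.roots.get(appRoot)
    return chains === undefined ? undefined : chains.get(address)
  }

  // Entries that would need wide advertisement (assumption: one per approot),
  // regardless of how many Pulses were generated beneath it.
  advertisedScopes(): number {
    return this.roots.size
  }
}
```

In this sketch, announcing many Pulses under one approot leaves `advertisedScopes()` unchanged, which is the property the scoping is meant to give us, in contrast to publishing one record per Pulse.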

IPFS intends a single open permission level for all

To this end, IPFS tries to reach out and contact the main network with data all the time. It also makes itself available to receive requests. This can be catastrophic for confidential information. Our initial plan to provide generic permissions for IPFS using content lookup was naive. Because of our high rate of Pulse generation, broadcast and discovery need to be made specific to our use case. Likewise, we need a permissions scheme specific to our model, as confidentiality must be absolute and without exception.

The risk of leaking information using IPFS directly seems too great, and gets worse as years go by. Controlling our networking directly vastly reduces this risk.

IPFS presents a stable interface to its consumers

Whilst IPFS is made up of many smaller reusable modules, the library strives to provide a stable and standard interface to its consumers. This means that many of the interface functions on the components that it packages up are not available to us directly. We found we were often attempting to perforate this packaging, reach inside to the components, and make modifications to suit our purposes.

It is far cleaner to be using the modules directly for our use case, rather than bending the IPFS library when its purposes are actually different to what we need. We get the full interface, as well as the latest updates, and we can submit pull requests directly to these libraries to fix our specific problems.

Utilized Components of IPFS

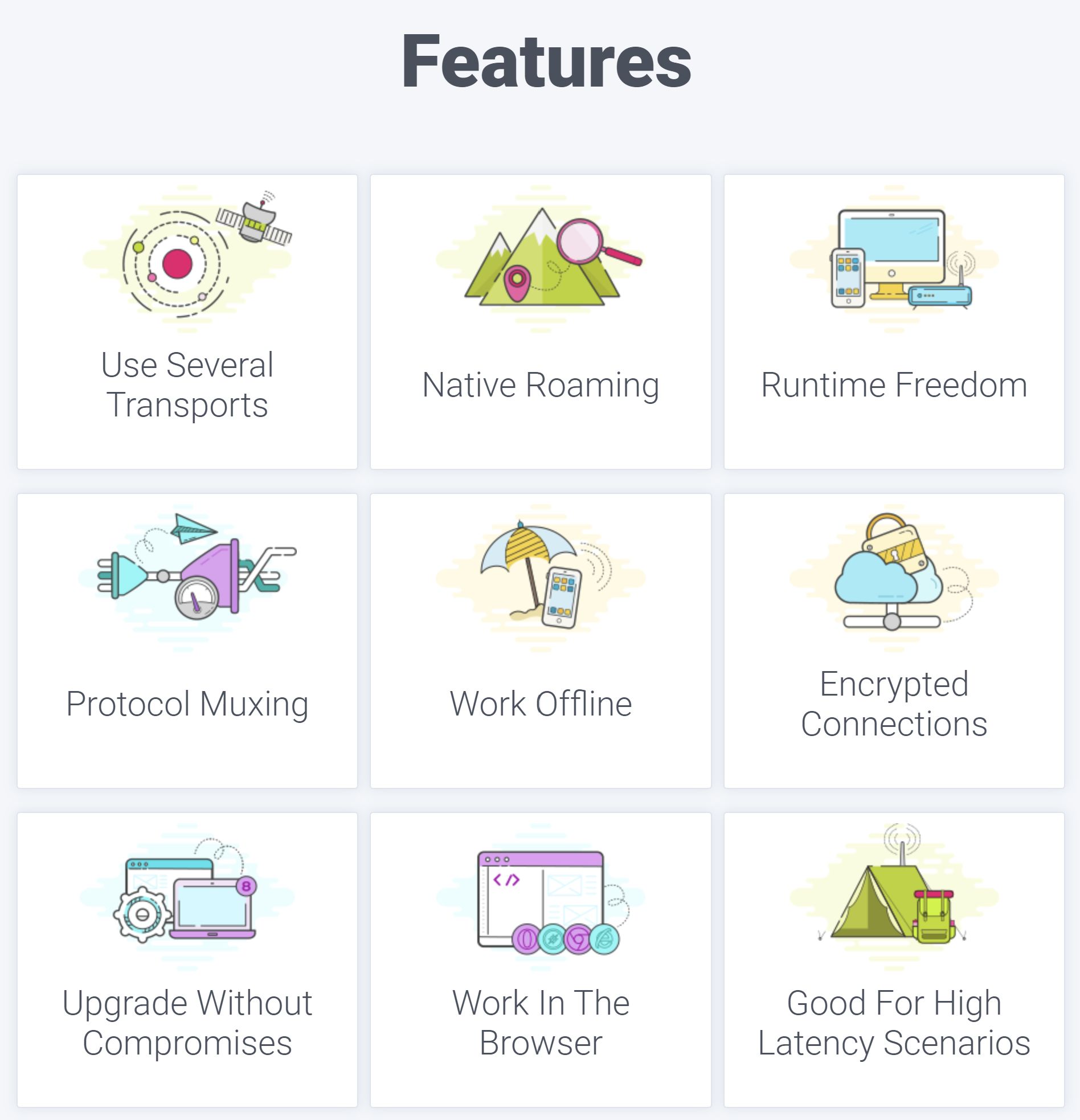

As can be seen from the feature list of libp2p below, their features describe exactly the properties we want from our networking module:

This is the list of modules and their purposes that we intend to pull from IPFS:

DHT from @libp2p/kad-dht

- Find peers based on a chainId, with permissions

- Find peers based on the chainId of an approot, with permissions

- Find CIDs of the latest pulse of a chain based on its chainId, with permissions

- Remote Validators to store new Interpulses that are available

PubSub from @chainsafe/libp2p-gossipsub

- Broadcast realtime updates for CIDs of the latest pulse of a chain based on its chainId, with permissions

- Validators to share the latest pulse with each other

- Remote Validators to notify of new Interpulses that are available

Repo from ipfs-repo

- Storage of data, config, and cryptographic keys securely to disk

Bitswap from ipfs-bitswap

- Provide and fetch blocks of data from connected peers, with permissions

To preserve privacy, we would run one instance of bitswap per chain permission group we were part of. Or, possibly we could modify the decision engine to look up permissions.

Bitswap is always initiated as a result of notifications through PubSub, or lookups from the DHT.
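The "one instance per permission group" option could be organised along these lines. This is a rough sketch under stated assumptions: `GroupExchange` is a hypothetical stand-in for a bitswap instance, `ExchangeRegistry` is an illustrative name, and nothing here uses the real ipfs-bitswap API.

```typescript
// Hypothetical sketch: GroupExchange stands in for one bitswap instance.
// None of these names come from ipfs-bitswap.
class GroupExchange {
  readonly groupId: string
  private readonly blocks = new Map<string, Uint8Array>()

  constructor(groupId: string) {
    this.groupId = groupId
  }

  put(cid: string, bytes: Uint8Array): void {
    this.blocks.set(cid, bytes)
  }

  get(cid: string): Uint8Array | undefined {
    return this.blocks.get(cid)
  }
}

class ExchangeRegistry {
  private readonly byGroup = new Map<string, GroupExchange>()

  // Lazily create exactly one exchange per permission group, so blocks
  // held for one group are never reachable through another group's instance.
  forGroup(groupId: string): GroupExchange {
    let exchange = this.byGroup.get(groupId)
    if (exchange === undefined) {
      exchange = new GroupExchange(groupId)
      this.byGroup.set(groupId, exchange)
    }
    return exchange
  }
}
```

The alternative mentioned above, modifying the decision engine to look up permissions, would keep a single instance and move the isolation into a per-request check instead.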

Privacy within chains

By taking control of the networking streams directly, we can implement privacy restrictions specific to us, rather than trying to solve this generically for all of IPFS. Each chain would list the public keys that are allowed to access it. Nodes would be required to authenticate to each other using their node keys, so that each node can check permissions before using bitswap to send blocks of data to the requesting node.

This may require some extra effort to allow chains of chains to have access, but this seems logical to implement.
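A minimal sketch of the check before sending blocks might look like the following. The names `ChainAccess`, `mayReceive`, and `serveBlock` are hypothetical, and the sketch assumes the requester's node key has already been authenticated elsewhere during connection setup.

```typescript
// Hypothetical sketch: ChainAccess, mayReceive and serveBlock are
// illustrative names, not an existing API.
interface ChainAccess {
  chainId: string
  allowedKeys: Set<string> // public keys listed within the chain
}

// authenticatedKey is the node key the requester proved ownership of
// (assumed to happen during the handshake); undefined means unauthenticated.
function mayReceive(chain: ChainAccess, authenticatedKey: string | undefined): boolean {
  return authenticatedKey !== undefined && chain.allowedKeys.has(authenticatedKey)
}

// Return the block only when the requester is permitted. A refusal and a
// missing block both yield undefined, so a refusal does not reveal whether
// the block exists.
function serveBlock(
  chain: ChainAccess,
  store: Map<string, Uint8Array>,
  cid: string,
  authenticatedKey: string | undefined
): Uint8Array | undefined {
  if (!mayReceive(chain, authenticatedKey)) {
    return undefined
  }
  return store.get(cid)
}
```

Access for chains of chains is not covered here; it would need the allowed set to be derived from more than one chain's list.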

Compatibility with IPFS

This approach remains fully compatible with IPFS, but making data browseable on the public IPFS network will require some additional coding effort. Our initial clients do not need this functionality and so this work is deferred, but we share the exact same data formats and the exact same networking protocols as the IPFS network.

Periodically, if a chain is public, we will publish to public IPFS and provide for the hash of the latest approot. This would be navigable by any generic IPFS browser to see the latest tree of the chain. It would also allow the history to be walked provided the client stayed connected to the provider node, which it normally would do.

Build plan

Using the IPFS prototype as the base guide, copy the implementation in IPFS to make our own dedicated libp2p networking module, with tests on each discrete piece of functionality and benchmarks.

Run with a single closed group of permissions, refusing connections from any peers who are not already entered into the system.

Build up functionality to be able to process the content we store to determine permissions to access that content.

Ultimately host our own public node and experiment with browser server functionality, achieving the long sought after Announce capability so natural to web 2.0 applications.